modelstogether.com development log

I built a service that lets LLMs talk to each other and a website to visualize those conversations.

LLMs talking to each other

This is what the conversation flow looks like:

┌─────────────────┐
│ Initial Prompt  │
└─────────┬───────┘
          │
          ▼
┌─────────────────────────────────────────────────────────────────┐
│                             TURN 1                              │
├─────────────────────────────────────────────────────────────────┤
│                                                                 │
│    ┌─────────────┐          prompt           ┌─────────────┐    │
│    │             │      ─────────────▶       │             │    │
│    │   LLM #1    │                           │   LLM #2    │    │
│    │  (ChatGPT)  │                           │  (Claude)   │    │
│    │             │      ◀─────────────       │             │    │
│    └─────────────┘     history+response      └─────────────┘    │
│                                                                 │
└─────────────────────────────────────────────────────────────────┘
          │
          ▼
┌─────────────────────────────────────────────────────────────────┐
│                             TURN 2                              │
├─────────────────────────────────────────────────────────────────┤
│                                                                 │
│    ┌─────────────┐     history+response      ┌─────────────┐    │
│    │             │     ────────────────▶     │             │    │
│    │   LLM #2    │                           │   LLM #1    │    │
│    │  (Claude)   │                           │  (ChatGPT)  │    │
│    │             │     ◀────────────────     │             │    │
│    └─────────────┘     history+response      └─────────────┘    │
│                                                                 │
└─────────────────────────────────────────────────────────────────┘
          │
          ▼
        ┌───┐
        │...│ Repeat until X turns reached
        └───┘
          │
          ▼
┌────────────────────┐
│ Final Conversation │
│      History       │
└────────────────────┘

The Stack

Advertising Tracking Provider

Just kidding. I don't want to know who you are (unless you want to sign up for my newsletter). There's none of that stuff.

Frontend

TypeScript, Vite, with a small number of dependencies:

"devDependencies": {
  "@types/node": "^20.11.0",
  "rollup-plugin-visualizer": "^5.12.0",
  "typescript": "^5.3.0",
  "vite": "^5.0.0"
},
"dependencies": {
  "date-fns": "^3.0.0"
},

Backend

TypeScript Lambda functions for any processing that needs to be done.
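For a sense of what one of these functions might look like, here is a minimal sketch of a handler behind an API Gateway proxy integration. The handler logic and the `ProxyEvent` / `ProxyResult` types are hypothetical stand-ins (a real project would more likely pull its types from the `aws-lambda` typings); only the `statusCode` / `headers` / `body` response shape comes from the proxy integration itself.

```typescript
// Minimal local types so the sketch is self-contained (assumption: a real
// project would use the event/result types from the `aws-lambda` typings).
interface ProxyEvent {
  body: string | null;
}

interface ProxyResult {
  statusCode: number;
  headers: Record<string, string>;
  body: string;
}

// Hypothetical handler: validates a JSON request body and echoes it back.
export async function handler(event: ProxyEvent): Promise<ProxyResult> {
  // Small helper to build a JSON response in the proxy integration shape.
  const json = (statusCode: number, payload: unknown): ProxyResult => ({
    statusCode,
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(payload),
  });

  if (!event.body) return json(400, { error: "missing body" });

  try {
    const parsed: unknown = JSON.parse(event.body);
    return json(200, { received: parsed });
  } catch {
    return json(400, { error: "body is not valid JSON" });
  }
}
```

Keeping the handler a plain async function with no AWS SDK calls makes it easy to exercise locally without deploying.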

Infrastructure

Terraform for the management and provisioning of the following AWS services: S3, CloudFront, Lambda, API Gateway, ACM.

My Claude Desktop Configuration

For this effort, I had the following MCPs installed:

- https://github.com/wonderwhy-er/DesktopCommanderMCP

- https://github.com/modelcontextprotocol/servers/blob/main/src/filesystem/README.md

- https://github.com/AgentDeskAI/browser-tools-mcp - this was a custom fork and pretty fiddly to use.

I had the following statement set as my preference:

You have access to my file system to read and write files.

You can access bash commands.

You can access github through the gh cli and my credentials are in the env.

My source code is in ~/src

Process

I used Claude Desktop for all of the development and will talk through the visualizer development activities for this example.

I set up a project in Claude which contained these instructions:

we are working in ~/src/llm-conversation-visualizer/

i am running a development version of the site at http://localhost:3000/

you should have access to the browser mcp tools which will allow you to take screenshots

I started the project by creating a plan like this using Claude.

My sessions generally started by making a statement like this in a new Claude conversation within the project:

read the plan and execute the next step. ~/src/llm-conversation-visualizer/claudimportplan.md. update the plan and stop before you think we'll run out of conversation context

That's it. I did a bunch of these conversations to get where I wanted to go.

Where did I want to go?

That's a great question.

Above all, when I started this, I just wanted to build something. I think I started with the idea of "it'd be cool to see LLMs talk to each other". (And it's only ok, but this gives me some more ideas.)

That was pretty easy to do. It started with a set of shell scripts that just enabled the basics. Then I realized that I wanted it in a structured format and tried to jump through some hoops with jq. Then we (that's me and Claude) moved it into TypeScript and had some back-and-forth conversations about making it do things that I wanted.
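For reference, the turn-taking flow from the diagram at the top can be sketched in TypeScript roughly like this. It is a sketch under assumptions, not the code behind the site: the `Message` shape, the `Model` type, and `runConversation` are hypothetical names, and a "turn" here means a single reply from one model, which is one possible reading of the diagram.

```typescript
// Hypothetical types and names; the real service's code is not shown in this post.
type Speaker = "llm1" | "llm2";

interface Message {
  speaker: Speaker | "initial";
  text: string;
}

// A model is anything that turns the history so far into a reply.
// In a real setup this would wrap a provider API call.
type Model = (history: readonly Message[]) => Promise<string>;

async function runConversation(
  initialPrompt: string,
  models: Record<Speaker, Model>,
  maxTurns: number,
): Promise<Message[]> {
  const history: Message[] = [{ speaker: "initial", text: initialPrompt }];
  let current: Speaker = "llm1";

  for (let turn = 0; turn < maxTurns; turn++) {
    // Each model sees the full history, including the other model's last response.
    const text = await models[current](history);
    history.push({ speaker: current, text });
    // Hand the conversation to the other model for the next turn.
    current = current === "llm1" ? "llm2" : "llm1";
  }
  return history;
}

// Stub model so the sketch runs without API keys: it replies to the last message.
const echo = (name: string): Model => async (history) =>
  `${name} replying to: ${history[history.length - 1].text}`;
```

Calling `runConversation("hi", { llm1: echo("A"), llm2: echo("B") }, 4)` returns the initial prompt followed by four alternating replies. Because the result is a plain array of objects, it serializes straight to JSON, which is the kind of structured output that is awkward to get from shell scripts and jq.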

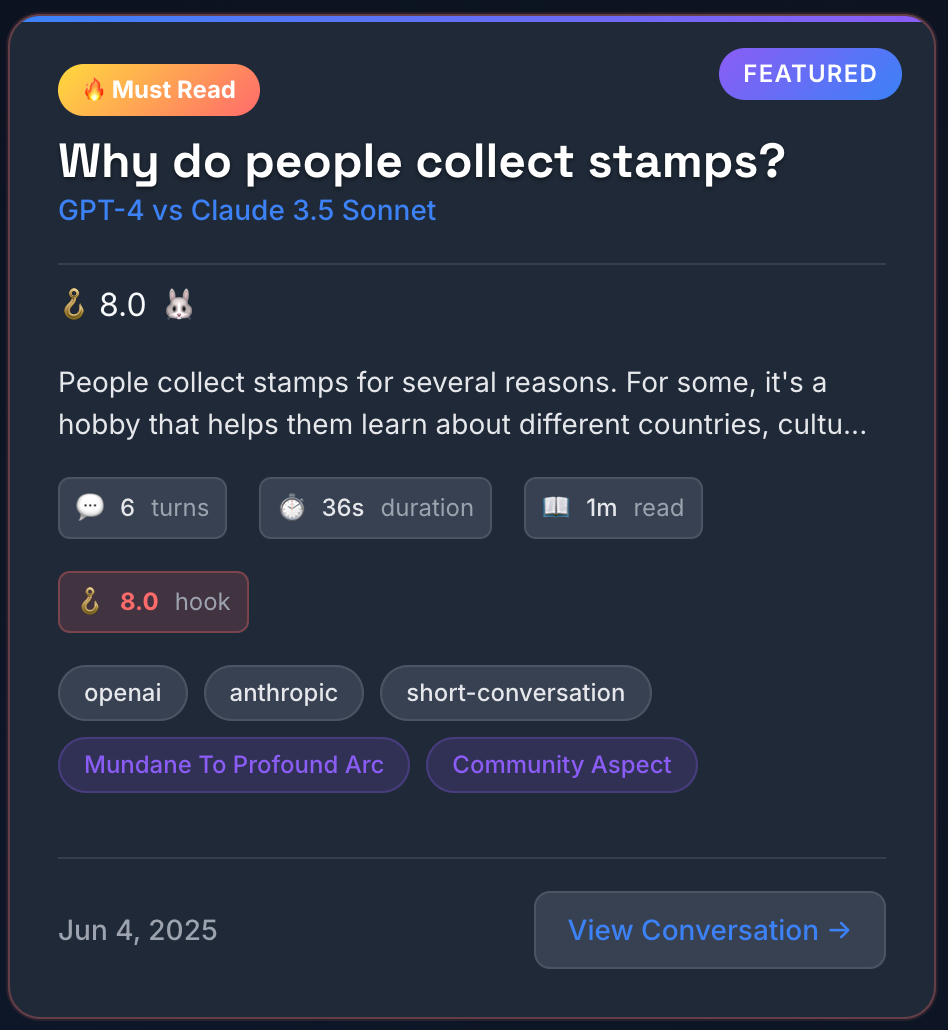

It's fun to look at where things ended up. I don't recall specifically asking for any of the elements that we have on the card display. I was much more likely to paste a bunch of JSON in and say "hey we have data that looks like this, i want to show a list of conversations". So there's some amount of autonomy that the model brought to the party.

The above is better than whatever I would have come up with on my own. I would love to work with a real designer who is using an AI-enabled workflow so that we could iterate very quickly.

I think that was one of the cooler parts of executing this work: having something on the other side that could take my vague ideas and turn them into something interesting.

And it didn't cringe when I asked for "more pop".

I didn't know what I wanted exactly, but I got something that I am happy with. I don't think it's "finished", but I don't know that I'll invest much more effort into it.

Rough Edges

There were a few things that should get easier/better quickly as this ecosystem develops. I'll leave some notes here about the rough edges that kept this from being even more productive.

browser tools

The browser tools weren't intended to be used by Claude Desktop so I spent some time getting that running (using Claude of course). To be fair, all it took was commenting out a bunch of console.log statements. Once it was running, I found it fiddly to use and keep running.
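A likely reason the `console.log` calls got in the way (an assumption, since the post doesn't dig into it): MCP servers launched by Claude Desktop talk over stdio, where stdout is reserved for protocol messages, so stray log lines there can corrupt the stream. Rather than commenting the logs out, one option is to route them to stderr. The `formatLog` and `log` helpers below are hypothetical names, not part of any MCP SDK or of the browser tools project.

```typescript
type Level = "info" | "warn" | "error";

// Builds a single log line; `now` is injectable so the output is predictable.
function formatLog(level: Level, message: string, now: Date = new Date()): string {
  return `${now.toISOString()} [${level}] ${message}`;
}

// console.error writes to stderr in Node.js, while console.log writes to stdout,
// so this keeps diagnostics out of the stream the protocol messages travel on.
function log(level: Level, message: string): void {
  console.error(formatLog(level, message));
}

log("info", "server started");
```

Swapping `console.log(...)` for a helper like `log("info", ...)` keeps the diagnostics available while leaving stdout clean.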

Giving the LLM direct access to the browser to take screenshots and see logs is really useful. Integrating this well into the LLM is a no-brainer and will save a ton of time.

Claude Desktop

I'm approaching 1k conversations and the app is starting to have some weird UI update issues as I move around. It's fine within a conversation, but I've had a few crashes.

LLM Code

You know, it's ok. But it generally does more than I want it to do.

Workflow

This is the place that I think can improve pretty quickly. I'd like to introduce trunk-based development into the process and put myself and an LLM as PR reviewers. I think that this will help with the quality of the code and solution. I also found myself in a spot where a bunch of stuff had been committed to main before I noticed that things were broken. Much better to have that happen in a feature branch.

Local development issues

I should have started with a working local development server. I still don't have a consistently working configuration that I can easily use locally. It's a real pain to be a build and deployment away from fully seeing your changes.